Cosine Learning Rate Decay

In this post, I will show my implementation of a learning rate decay schedule for TensorFlow Keras based on the cosine function.

One of the most difficult hyperparameters to set when training any deep learning model is the learning rate. If it is too large, the weights oscillate and change drastically from one update to the next, which prevents the model from settling as it follows the error signal. If it is too small, training becomes very slow, the model takes a long time to learn, and it is more likely to get stuck in a local minimum. Imagine that the loss the model is minimizing behaves like a quadratic ("U"-shaped) curve and that the learning rate is the size of the steps a ball takes along it: if the steps are too large, the ball keeps jumping from one side of the valley to the other and cannot advance toward the bottom. There are several solutions to these problems, from learning rate schedules such as step decay to adaptive methods such as Adam or RMSProp.
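To make the ball analogy concrete, here is a minimal sketch of plain gradient descent on the quadratic f(x) = x², whose gradient is 2x, so every update multiplies x by (1 − 2·lr). The function name `gradient_descent_on_parabola` and the three learning rates are illustrative choices, not taken from the post.

```python
def gradient_descent_on_parabola(learning_rate, x0=1.0, steps=10):
    """Run plain gradient descent on f(x) = x**2 and return every value of x."""
    x = x0
    trajectory = [x]
    for _ in range(steps):
        grad = 2.0 * x  # derivative of x**2
        x = x - learning_rate * grad
        trajectory.append(x)
    return trajectory


# Too large, reasonable, and too small learning rates.
for lr in (1.1, 0.4, 0.01):
    final_x = gradient_descent_on_parabola(lr)[-1]
    print(f"lr={lr}: x after 10 steps = {final_x:.4f}")
```

With lr = 1.1 the multiplier is −1.2, so x flips sign at every step and moves further from the minimum (the ball bouncing across the valley). With lr = 0.01 the multiplier is 0.98, so x barely moves in 10 steps, while lr = 0.4 (multiplier 0.2) reaches the bottom almost immediately.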

In training deep networks, it is usually helpful to anneal the learning rate over time. Good intuition to have in mind is that with a high learning rate, the system contains too much kinetic energy and the parameter vector bounces around chaotically, unable to settle down into deeper, but narrower parts of the loss function. Knowing when to decay the learning rate can be tricky: Decay it slowly and you’ll be wasting computation bouncing around chaotically with little improvement for a long time. But decay it too aggressively and the system will cool too quickly, unable to reach the best position it can. ¹

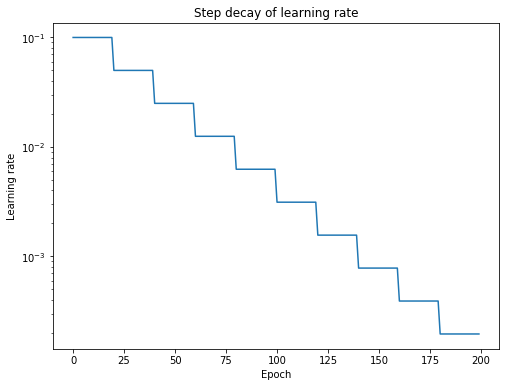

One of the most popular learning rate annealing schedules is step decay, a very simple approach in which the learning rate is reduced by some percentage after a set number of training epochs.
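A minimal sketch of step decay, assuming the common form in which the rate is multiplied by a constant `drop` factor every `epochs_drop` epochs. The function name, parameter names and default values are hypothetical.

```python
import math


def step_decay(epoch, initial_lr=0.1, drop=0.5, epochs_drop=10):
    """Multiply the learning rate by `drop` once every `epochs_drop` epochs."""
    return initial_lr * math.pow(drop, math.floor(epoch / epochs_drop))


for epoch in (0, 9, 10, 25):
    print(epoch, step_decay(epoch))
```

In Keras, a function like this can be handed to `tf.keras.callbacks.LearningRateScheduler`, which calls its schedule with the epoch index and the current learning rate, for example `tf.keras.callbacks.LearningRateScheduler(lambda epoch, lr: step_decay(epoch))`.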

The approach I want to show in this post is cosine decay with warm-up. As the name suggests, it is based on the cosine function. To understand how it is implemented, we first need to cover a few concepts.
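For reference, the commonly used cosine annealing curve takes the learning rate from a maximum $\eta_{\max}$ to a minimum $\eta_{\min}$ over $T$ steps. This is the generic form, stated here as an assumption rather than as the exact parameterization of the implementation in this post:

$$
\eta_t = \eta_{\min} + \frac{1}{2}\left(\eta_{\max} - \eta_{\min}\right)\left(1 + \cos\left(\frac{\pi t}{T}\right)\right), \qquad 0 \le t \le T
$$

At $t = 0$ the cosine equals 1, so $\eta_0 = \eta_{\max}$; at $t = T$ it equals $-1$, so $\eta_T = \eta_{\min}$. The curve is flat at both ends and steepest in the middle, which is what makes the decay smooth.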

The first concept is warm-up. In the training process, the first epochs tend to be the ones with the highest error rate, because the model's weights start out random. There are different ways to attack this problem, such as using weights trained on a similar task (fine-tuning) or using a better initializer, although the latter still has the same problem, only to a lesser extent. It remains a problem in classic deep learning tasks, and even more so in real production models. Warm-up consists of gradually increasing the learning rate from 0 to its target value over a fixed number of training iterations.
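The post does not specify the shape of the warm-up ramp; a common choice, assumed in this sketch, is a linear increase. `linear_warmup` and its arguments are hypothetical names.

```python
def linear_warmup(step, warmup_steps, target_lr):
    """Learning rate during a linear warm-up.

    Ramps from 0 at step 0 to target_lr at step warmup_steps, then stays at
    target_lr. Steps can be batches or epochs, as long as both arguments use
    the same unit.
    """
    if warmup_steps <= 0 or step >= warmup_steps:
        return target_lr
    return target_lr * step / warmup_steps


for step in (0, 250, 500, 1000, 1500):
    print(step, linear_warmup(step, warmup_steps=1000, target_lr=1e-3))
```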

After the warm-up, the model trains for a specific number of epochs at the full learning rate, and then the decay following the cosine function begins. There are two options for the end of training: reduce the learning rate all the way to zero, or stop reducing it once it reaches a small epsilon value. I prefer the second one because, in some cases, the model still manages to escape a local minimum near the end of training, but this is more of an experimental observation than a strict rule.
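Putting the three phases together, here is a sketch of the full schedule as a pure function: linear warm-up, a hold at the full rate, then a half-cosine decay that stops at an epsilon floor instead of zero. The linear ramp, the function name and all parameter names are assumptions for illustration, not the author's code.

```python
import math


def warmup_hold_cosine(step, base_lr, total_steps, warmup_steps=0,
                       hold_steps=0, min_lr=1e-6):
    """Learning rate at `step` for a warm-up -> hold -> cosine-decay schedule.

    - Warm-up: linear ramp from 0 to base_lr over warmup_steps.
    - Hold: stay at base_lr for hold_steps.
    - Decay: half cosine from base_lr down to min_lr (the epsilon floor) over
      the remaining steps; after total_steps the rate stays at min_lr.
    """
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    if step < warmup_steps + hold_steps:
        return base_lr
    decay_steps = max(1, total_steps - warmup_steps - hold_steps)
    progress = min(1.0, (step - warmup_steps - hold_steps) / decay_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for step in (0, 50, 150, 600, 1000):
    print(step, warmup_hold_cosine(step, base_lr=0.1, total_steps=1000,
                                   warmup_steps=100, hold_steps=100))
```

Setting `min_lr=0.0` gives the "decay to zero" variant; keeping a small positive value follows the epsilon option preferred above.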

This is the Keras implementation. To use it, import the class, instantiate it with the parameters that fit your use case, and add the object to the callbacks list passed to the training process.
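A rough sketch of such a callback, assuming TensorFlow 2.x. It is not the author's class: `PerStepLearningRateScheduler`, its arguments and the per-batch design are illustrative assumptions. It takes any step-to-learning-rate function (for example a warm-up plus cosine-decay function) and applies it before every training batch.

```python
import tensorflow as tf


class PerStepLearningRateScheduler(tf.keras.callbacks.Callback):
    """Sets the optimizer's learning rate before every training batch.

    `schedule` is any function that maps a global step (int) to a learning
    rate (float). Assumes TensorFlow 2.x and an optimizer created with a
    plain float learning rate (not a LearningRateSchedule object).
    """

    def __init__(self, schedule, initial_step=0, verbose=0):
        super().__init__()
        self.schedule = schedule
        self.global_step = initial_step  # counts batches across all epochs
        self.verbose = verbose
        self.history = []  # learning rate applied at each step, handy for plotting

    def on_train_batch_begin(self, batch, logs=None):
        lr = float(self.schedule(self.global_step))
        # Assigning to the property updates the optimizer's learning-rate variable.
        self.model.optimizer.learning_rate = lr
        self.history.append(lr)

    def on_train_batch_end(self, batch, logs=None):
        self.global_step += 1

    def on_epoch_end(self, epoch, logs=None):
        if self.verbose and self.history:
            print(f"\nEpoch {epoch + 1}: last learning rate = {self.history[-1]:.6g}")
```

Usage would look like `model.fit(x, y, epochs=20, callbacks=[PerStepLearningRateScheduler(my_schedule)])`, where `my_schedule` is a hypothetical step-to-rate function. Updating per batch instead of per epoch (which is what the built-in `tf.keras.callbacks.LearningRateScheduler` does) keeps the warm-up smooth even when it lasts only a few epochs.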

To plot the learning rate in TensorBoard, you need to create a class that inherits from TensorBoard and adds the optimizer's learning rate to the logged values. This is the code in Keras.
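A minimal sketch of that subclass, assuming TensorFlow 2.x, where `TensorBoard.on_epoch_end` writes every entry of the `logs` dictionary as an epoch-level scalar. The class name `LRTensorBoard` is hypothetical, and the sketch assumes the optimizer's learning rate is a variable (as when a callback sets it), not a schedule object.

```python
import tensorflow as tf


class LRTensorBoard(tf.keras.callbacks.TensorBoard):
    """TensorBoard callback that also logs the optimizer's learning rate."""

    def on_epoch_end(self, epoch, logs=None):
        logs = dict(logs or {})  # copy so the extra key is only seen by TensorBoard
        # Read the current value of the learning-rate variable as a Python float.
        lr = tf.convert_to_tensor(self.model.optimizer.learning_rate)
        logs["learning_rate"] = float(lr)
        super().on_epoch_end(epoch, logs)
```

It replaces the regular `TensorBoard` callback in the callbacks list, placed alongside whatever callback adjusts the learning rate, and the learning rate then shows up as its own scalar chart next to the loss.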

I hope this helps. In my experience, combining cosine decay with a more advanced optimizer such as Adam significantly improves the learning process and helps avoid local minima. But this method has its own disadvantages: first, we don't know what the optimal initial learning rate is, and second, in some cases decreasing the learning rate may lead to our network getting "stuck" on plateaus of the loss landscape. Another interesting approach is cyclical learning rates, which let the learning rate oscillate cyclically between two bounds.
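For comparison, a minimal sketch of the simplest cyclical policy, the "triangular" schedule from Leslie Smith's work on cyclical learning rates: the rate rises linearly from a lower bound to an upper bound over `step_size` iterations, falls back over the next `step_size`, and repeats. The function name, bounds and defaults are illustrative assumptions.

```python
import math


def triangular_clr(iteration, base_lr=1e-4, max_lr=1e-2, step_size=2000):
    """Triangular cyclical learning rate between base_lr and max_lr.

    One full cycle lasts 2 * step_size iterations: up for step_size,
    then down for step_size.
    """
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)  # 1 at the bounds, 0 at the peak
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)


for it in (0, 1000, 2000, 3000, 4000):
    print(it, triangular_clr(it))
```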

My name is Sebastian Correa; here is my web page if you want to see more of my projects.

[1]: Andrej Karpathy, CS231n course notes, "Annealing the learning rate", http://cs231n.github.io/neural-networks-3/#annealing-the-learning-rate

[2]: Jeremy Jordan, "Setting the learning rate of your neural network", https://www.jeremyjordan.me/nn-learning-rate/